Keras Model Fit Learning Rate

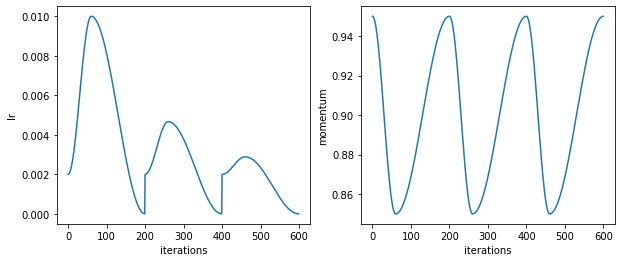

Keras learning rate finder.

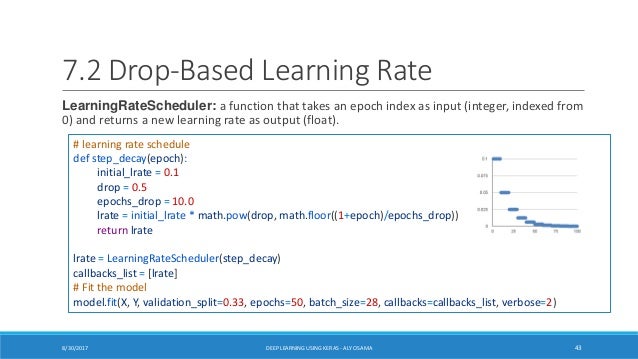

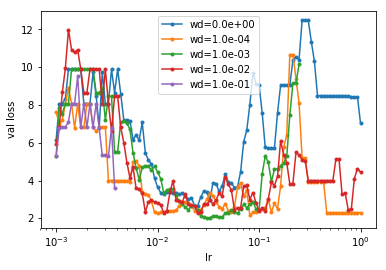

Keras model fit learning rate. The mathematical form of time-based decay is $lr = lr_0 / (1 + kt)$, where $lr_0$ and $k$ are hyperparameters and $t$ is the iteration number. Looking into the source code of Keras, the SGD optimizer takes `decay` and `lr` arguments and updates the learning rate by a decreasing factor at each iteration (batch update). In the first part of this tutorial we'll briefly discuss a simple yet elegant algorithm that can be used to automatically find optimal learning rates for your deep neural network. An `ExponentialDecay` schedule with `initial_learning_rate=1e-2`, `decay_steps=10000`, and `decay_rate=0.9` is passed to a `keras` optimizer.
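The two decay rules mentioned above can be sketched in plain Python. This is an illustration, not the Keras source: the function names are made up here, and the exponential form assumes the continuous (non-staircase) variant, `initial_learning_rate * decay_rate ** (step / decay_steps)`.

```python
def time_based_decay(lr0, k, t):
    """Time-based decay: lr0 / (1 + k * t), with t the iteration number."""
    return lr0 / (1.0 + k * t)


def exponential_decay(initial_learning_rate, decay_rate, decay_steps, step):
    """Continuous exponential decay (assumed non-staircase form)."""
    return initial_learning_rate * decay_rate ** (step / decay_steps)


if __name__ == "__main__":
    # Time-based decay with illustrative hyperparameters lr0=0.1, k=0.01.
    for t in (0, 100, 1000):
        print("iteration", t, "lr", time_based_decay(0.1, 0.01, t))

    # Exponential decay with the values quoted in the text.
    for step in (0, 10000, 20000):
        print("step", step, "lr", exponential_decay(1e-2, 0.9, 10000, step))
```

With the values quoted in the text, the exponential schedule multiplies the rate by 0.9 every 10000 steps, while time-based decay halves the initial rate once `k * t` reaches 1.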

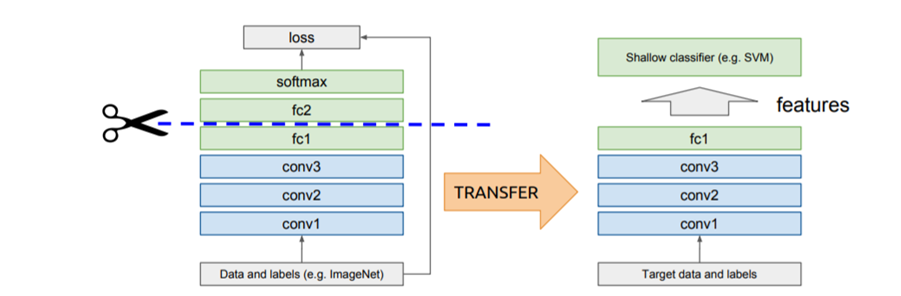

The decay factor uses `self.decay` and `self.iterations`. This guide covers training, evaluation, and prediction (inference) of models when using built-in APIs for training and validation, such as `model.fit()`, `model.evaluate()`, and `model.predict()`. In the first part of this guide we'll discuss why the learning rate is the most important hyperparameter when it comes to training your own deep neural networks. LR schedule in Keras.

If you are interested in leveraging `fit()` while specifying your own training step function, see the. From there I'll show you how to implement this method using the Keras deep learning framework. I saw that @EderSantana (my hero) was working on changing the learning rates during training. I'm new to Keras. I want to get the learning rate while training an LSTM with the SGD optimizer. I have set the `decay` parameter and it seems to work, but when I use `model.optimizer.lr.get_value()` to read the learning rate, it doesn't change at all. My setting is as follows.

Constant learning rate, time-based decay. This blog post is now TensorFlow 2 compatible. No change for a given number of training epochs. Setup: `import tensorflow as tf`, `from tensorflow import keras`, `from tensorflow.keras import layers`. Introduction.

I believe that commit was made here. The `LearningRateScheduler` callback allows us to define a function that takes the epoch number as an argument and returns the learning rate to use in stochastic gradient descent. You can use a learning rate schedule to modulate how the learning rate of your optimizer changes over time.
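A minimal sketch of such a schedule function, assuming a step-decay rule with illustrative values (initial rate 0.1, halved every 10 epochs) that do not come from the original text. The helper name `make_scheduler_callback` is hypothetical; `keras.callbacks.LearningRateScheduler` is the callback named above.

```python
import math


def step_decay(epoch, lr=None):
    """Return the learning rate for a given epoch index.

    Illustrative rule: start at 0.1 and halve every 10 epochs. The optional
    `lr` argument is accepted because the callback may also pass the current
    rate; it is ignored here.
    """
    initial_lr = 0.1
    drop = 0.5
    epochs_per_drop = 10
    return initial_lr * drop ** math.floor(epoch / epochs_per_drop)


def make_scheduler_callback():
    """Wrap the schedule in the Keras callback (hypothetical helper)."""
    # Imported lazily so the schedule itself can be used without TensorFlow.
    from tensorflow import keras
    return keras.callbacks.LearningRateScheduler(step_decay)


# Usage, assuming `model`, `x_train`, and `y_train` already exist:
# model.fit(x_train, y_train, epochs=30, callbacks=[make_scheduler_callback()])
```

Keeping the schedule as a plain function makes it easy to check the rate for any epoch before training starts.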

`np_epoch_init = 1`; `sgd = SGD(lr=lr_init, decay=decay_init)`. My question is that I normally have my model train for one epoch, do some predictions, and then train the next epoch. We'll then dive into why we may want to adjust our learning rate during training. We can implement this in Keras using the `LearningRateScheduler` callback when fitting the model.
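For the `decay` argument, the rate actually in effect can be tracked by hand. The sketch below is a plain-Python stand-in, not Keras code. It assumes the legacy SGD rule `lr * 1 / (1 + decay * iterations)`, that the counter advances once per batch, and that it carries over between successive `fit` calls on the same compiled model. Under those assumptions the stored base rate never changes, which would explain why reading it back shows a constant value. `DecayTracker` and its methods are hypothetical names.

```python
class DecayTracker:
    """Hypothetical helper mirroring lr * 1 / (1 + decay * iterations)."""

    def __init__(self, lr_init, decay_init):
        self.lr = lr_init        # stored base rate; never modified here
        self.decay = decay_init
        self.iterations = 0      # counts batch updates, not epochs

    def run_epoch(self, batches_per_epoch):
        """Advance the counter as one epoch of training would."""
        self.iterations += batches_per_epoch

    def effective_lr(self):
        """Rate applied to the next update under the assumed rule."""
        return self.lr * (1.0 / (1.0 + self.decay * self.iterations))


if __name__ == "__main__":
    tracker = DecayTracker(lr_init=0.1, decay_init=0.01)
    for epoch in range(3):
        tracker.run_epoch(batches_per_epoch=100)
        # Predictions between epochs would go here; they do not touch the counter.
        print("epoch", epoch, "base", tracker.lr, "effective", tracker.effective_lr())
```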